- ¹ University of Illinois at Urbana-Champaign

- ² Zhejiang University

- ³ University of Maryland, College Park

- \* Equal contribution

Abstract

Physical simulations produce excellent predictions of

weather effects. Neural radiance fields produce SOTA scene

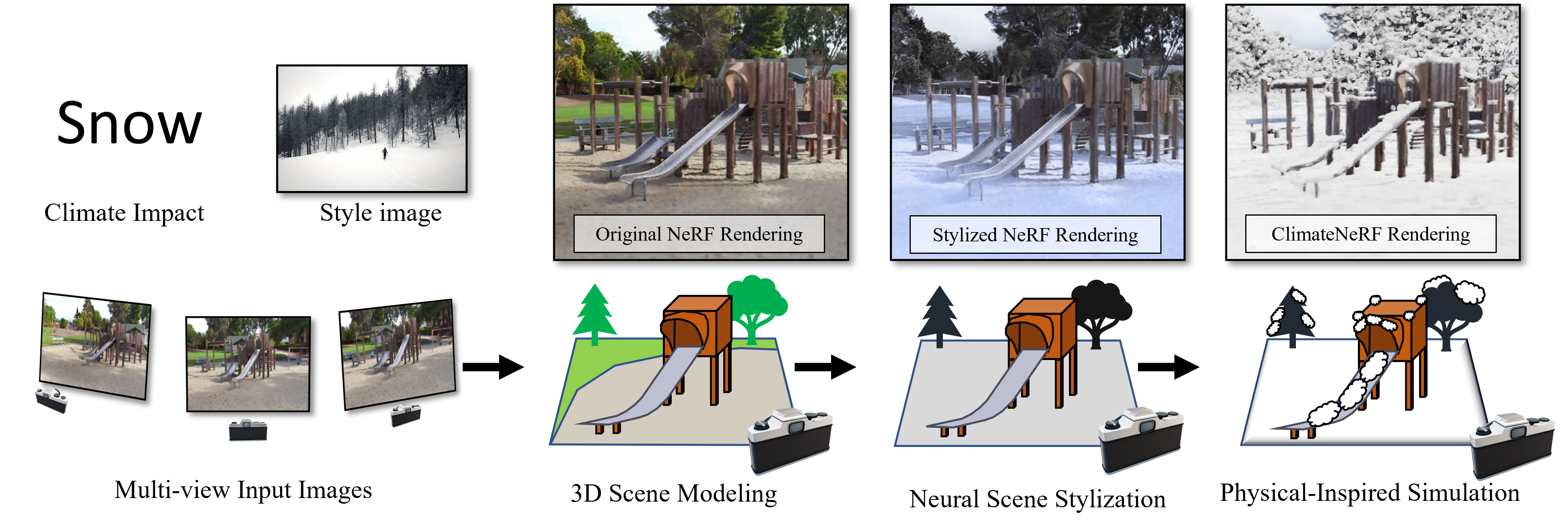

models. We describe a novel NeRF-editing procedure that

can fuse physical simulations with NeRF models of scenes,

producing realistic movies of physical phenomena in those

scenes. Our application -- Climate NeRF -- allows people to

visualize what climate change outcomes will do to them.

ClimateNeRF allows us to render realistic weather effects, including smog, snow, and flood. Results can be controlled with physically meaningful variables like water level. Qualitative and quantitative studies show that our simulated results are significantly more realistic than those from SOTA 2D image editing and SOTA 3D NeRF stylization.


Weather simulations

* We compare our method with baselines under different weather conditions across several scenes.

* The "3D stylization" baseline fine-tunes a pre-trained Instant-NGP model using FastPhotoStyle.


Rising Sea-level Simulation

Controllable rendering

Our method can simulate smog at different densities, as well as floods and accumulated snow at different heights:

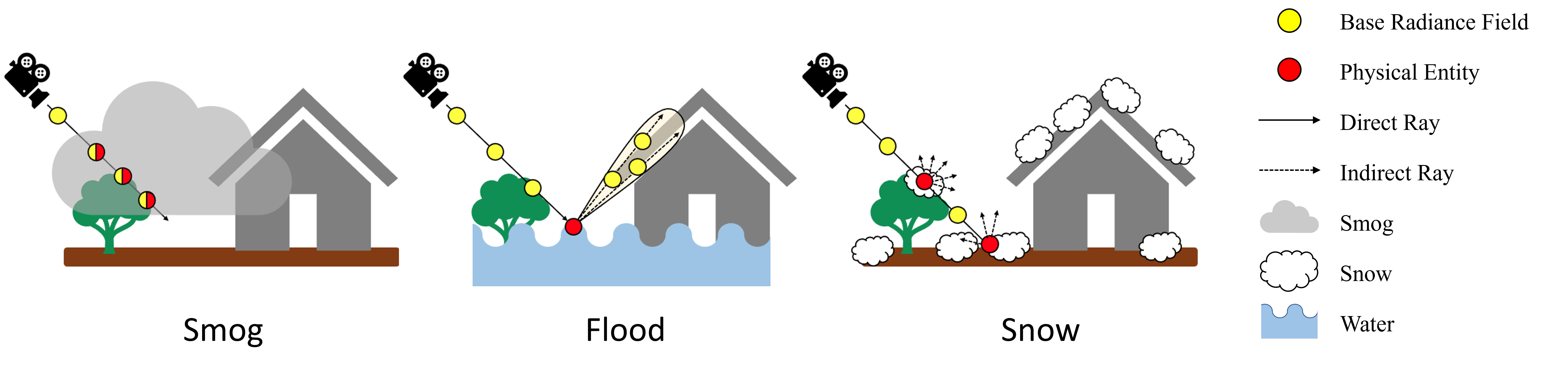
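The controllable variables above correspond to simple physical quantities. The Python sketch below is illustrative only and is not the ClimateNeRF implementation. It assumes that smog is a homogeneous medium whose extinction coefficient plays the role of "density" (Beer-Lambert attenuation), and that a flood is a horizontal plane `z = water_level` in a z-up world frame. The function names `smog_transmittance`, `water_hit_distance`, and `first_surface` are hypothetical.

```python
import math


def smog_transmittance(density: float, distance: float) -> float:
    """Hypothetical helper: fraction of light that survives a path of length
    `distance` through homogeneous smog with extinction coefficient `density`
    (Beer-Lambert law). Larger density -> less light gets through."""
    if density < 0 or distance < 0:
        raise ValueError("density and distance must be non-negative")
    return math.exp(-density * distance)


def water_hit_distance(origin, direction, water_level):
    """Hypothetical helper: distance t > 0 at which the ray origin + t * direction
    meets the horizontal plane z = water_level, or None if it never does.
    Assumes a z-up world frame."""
    oz, dz = origin[2], direction[2]
    if abs(dz) < 1e-12:  # ray is parallel to the water surface
        return None
    t = (water_level - oz) / dz
    return t if t > 0 else None


def first_surface(origin, direction, nerf_depth, water_level):
    """Hypothetical helper: decide whether a camera ray reaches the flood plane
    before the scene surface. `nerf_depth` is assumed to be the depth rendered
    by the radiance field along this ray."""
    t = water_hit_distance(origin, direction, water_level)
    if t is not None and t < nerf_depth:
        return "water", t
    return "scene", nerf_depth
```

Raising `water_level` moves the plane up, so more rays reach the water before the reconstructed scene surface; raising `density` darkens and washes out distant content faster.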

Rendering Procedure of ClimateNeRF

We first determine the positions of the physical entities (smog particles, snowballs, the water surface) with a physical simulation. We then render the scene with the desired effects by modeling the light transport between these physical entities and the scene. More specifically, we follow the volume rendering process and fuse the color and density estimated from 1) the original radiance field (by querying the trained Instant-NGP model) and 2) the physical entities (by physically based rendering). Our rendering procedure thus maintains the realism of the original scene while achieving complex yet physically plausible visual effects.

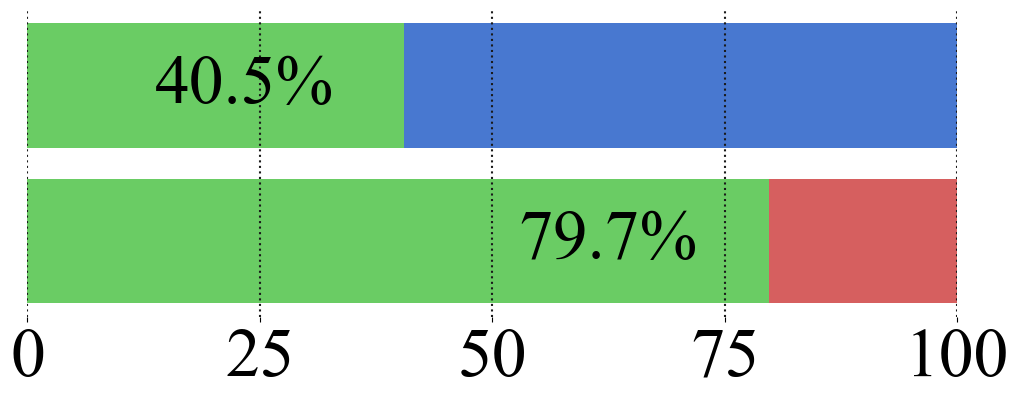

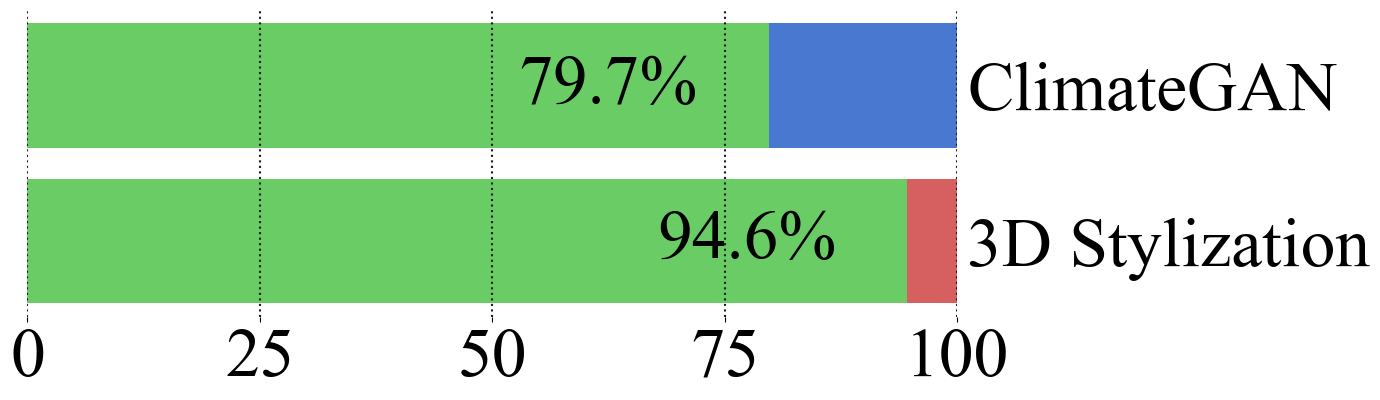
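One common way to realize the fusion described above is to treat the radiance field and a physical entity such as smog as two participating media that occupy the same space: densities add, and the color at each point is the density-weighted average of the two colors, after which the usual NeRF quadrature composites samples along the ray. The sketch below works under that assumption and is not the authors' code; entities with a hard surface, such as water, would additionally need reflection and refraction, which are omitted. `fuse`, `render_ray`, and the toy field callables are hypothetical names.

```python
import math
from typing import Callable, Sequence, Tuple

Vec = Tuple[float, ...]
# Hypothetical field interface: maps a 3D point to (density sigma, RGB color).
Field = Callable[[Vec], Tuple[float, Vec]]


def fuse(nerf: Field, medium: Field, x: Vec) -> Tuple[float, Vec]:
    """Combine two co-located media (assumption): densities add and
    colors are weighted by each medium's share of the total density."""
    s1, c1 = nerf(x)
    s2, c2 = medium(x)
    sigma = s1 + s2
    if sigma <= 0.0:
        return 0.0, (0.0, 0.0, 0.0)
    color = tuple((s1 * a + s2 * b) / sigma for a, b in zip(c1, c2))
    return sigma, color


def render_ray(origin: Vec, direction: Vec, t_vals: Sequence[float],
               nerf: Field, medium: Field) -> Vec:
    """Standard NeRF quadrature on the fused field:
    C = sum_i T_i * alpha_i * c_i, with alpha_i = 1 - exp(-sigma_i * delta_i)
    and T_i = prod_{j < i} (1 - alpha_j)."""
    rgb = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for i in range(len(t_vals) - 1):
        delta = t_vals[i + 1] - t_vals[i]
        x = tuple(o + t_vals[i] * d for o, d in zip(origin, direction))
        sigma, color = fuse(nerf, medium, x)
        alpha = 1.0 - math.exp(-sigma * delta)
        weight = transmittance * alpha
        for k in range(3):
            rgb[k] += weight * color[k]
        transmittance *= 1.0 - alpha
    return tuple(rgb)
```

With an empty scene and uniform gray smog, the rendered color approaches the smog color as the path length grows, since the accumulated weights sum to `1 - exp(-sigma * length)`.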

User Study

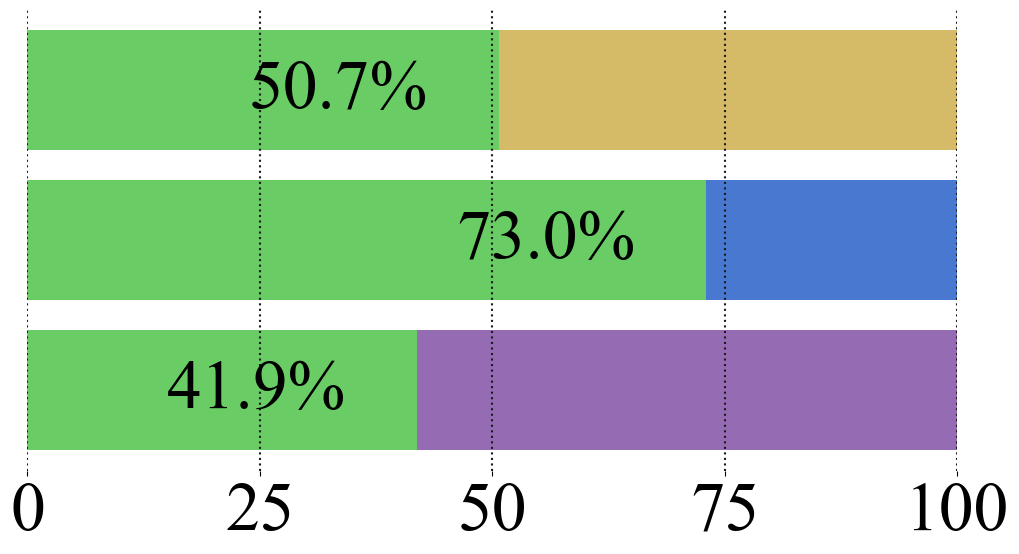

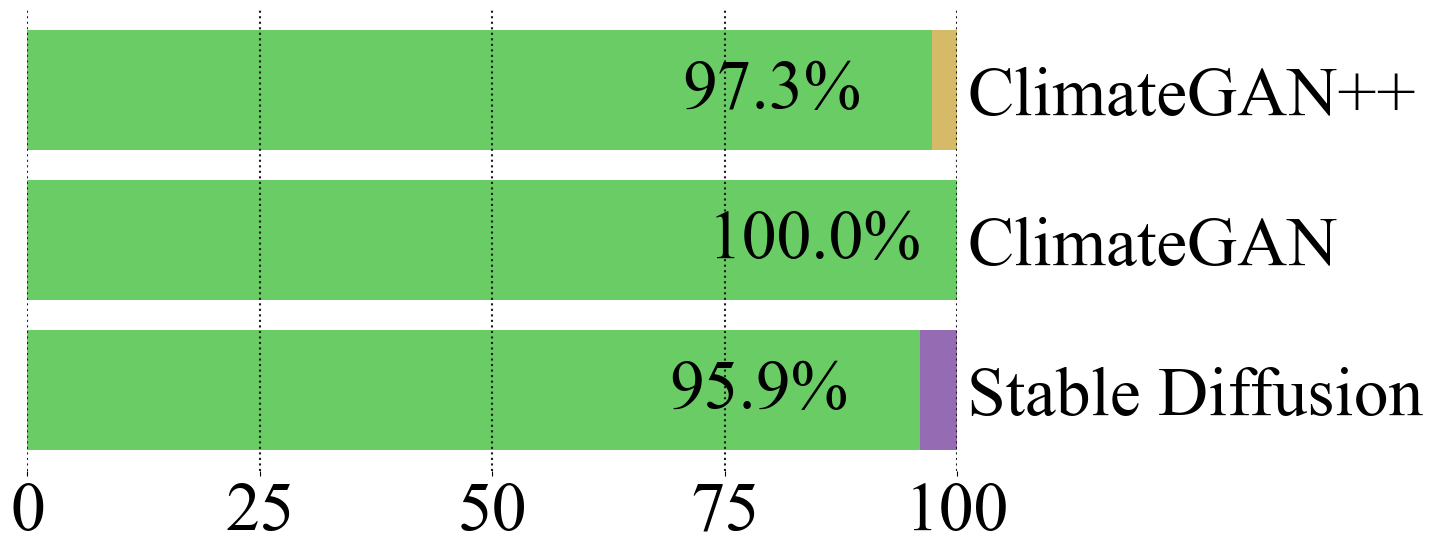

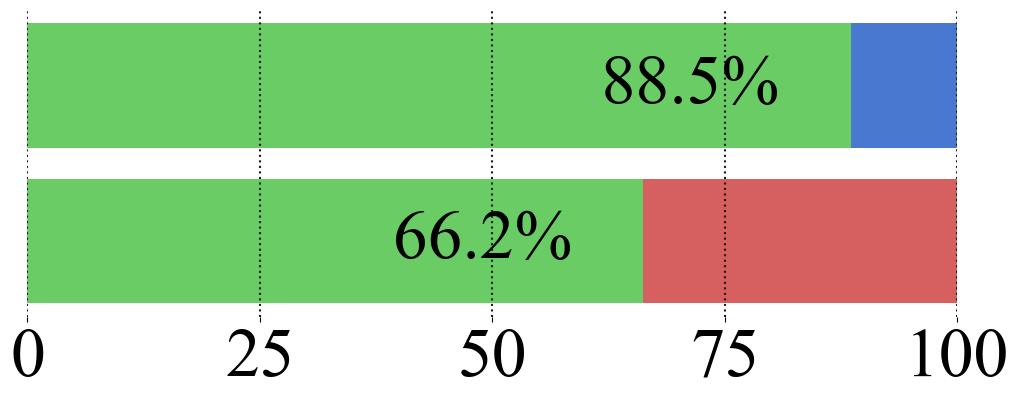

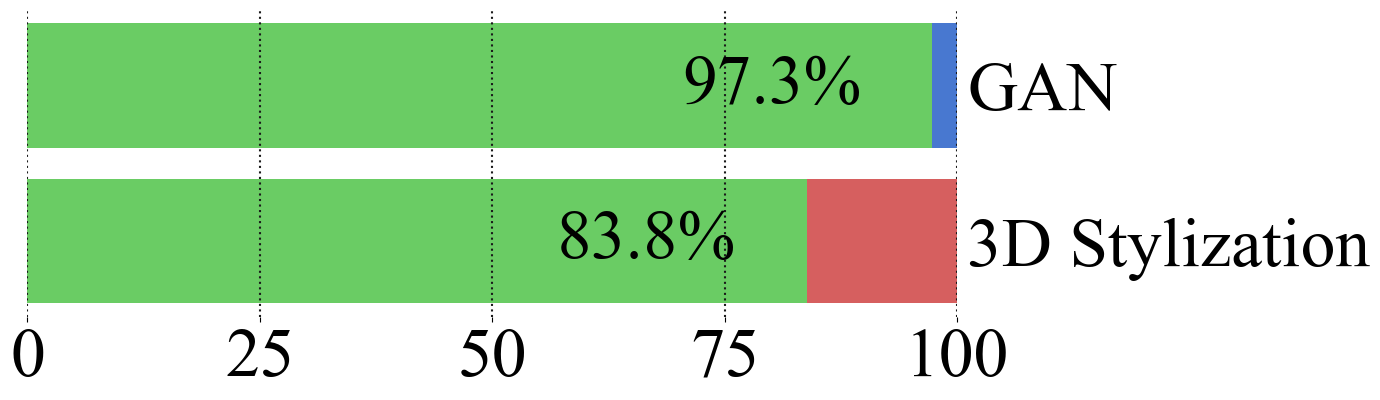

We perform a user study to validate our approach quantitatively. Users watch pairs of synthesized images or videos of the same scene and pick the one with higher realism. A total of 37 users participated in the study, and we collected 2,664 pairwise comparisons.

User-study results for Smog, Flood, and Snow, shown separately for Images and Videos.

Bar length indicates the percentage of votes judging each method more realistic than its opponent. The green bars, labeled with numbers, show our win rate against each baseline. On videos, our method significantly outperforms all baselines.
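The page reports win rates and describes the video results as significant, but it does not say which statistical test was used. As an illustration only, the sketch below computes a win rate and an exact two-sided binomial sign test against the 50% rate expected if users chose at random. It treats every comparison as independent, which ignores that each user contributed many votes. The function names are hypothetical, and because this page lists no per-baseline vote counts, none are filled in here.

```python
from math import comb


def win_rate(ours_preferred: int, total: int) -> float:
    """Fraction of pairwise comparisons in which our result was judged more realistic."""
    if total <= 0:
        raise ValueError("total must be positive")
    return ours_preferred / total


def sign_test_p_value(k: int, n: int) -> float:
    """Exact two-sided binomial (sign) test of H0: each vote is a coin flip (p = 0.5).

    Sums the probabilities of all outcomes that are no more likely than the observed k.
    """
    if not 0 <= k <= n:
        raise ValueError("need 0 <= k <= n")
    # Probability of each possible number of wins under H0.
    probs = [comb(n, i) / 2**n for i in range(n + 1)]
    observed = probs[k]
    # The small relative tolerance keeps outcomes with exactly equal probability
    # (e.g. the mirror-image outcome n - k) from being dropped by rounding error.
    p = sum(q for q in probs if q <= observed * (1 + 1e-9))
    return min(1.0, p)
```

For a given baseline, `sign_test_p_value(k, n)` takes the k votes for our method out of n comparisons; a small p-value means the observed win rate would be unlikely if users were choosing at random.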

References

- Victor Schmidt, Alexandra Sasha Luccioni, Mélisande Teng, Tianyu Zhang, Alexia Reynaud, Sunand Raghupathi, Gautier Cosne, Adrien Juraver, Vahe Vardanyan, Alex Hernandez-Garcia, and Yoshua Bengio. ClimateGAN: Raising climate change awareness by generating images of floods. In ICLR, 2022.

- Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Björn Ommer. High-resolution image synthesis with latent diffusion models. In CVPR, 2022.

- Taesung Park, Jun-Yan Zhu, Oliver Wang, Jingwan Lu, Eli Shechtman, Alexei Efros, and Richard Zhang. Swapping autoencoder for deep image manipulation. In NeurIPS, 2020.

Citation

If you find our project useful, please consider citing:

@inproceedings{Li2023ClimateNeRF,

title={ClimateNeRF: Extreme Weather Synthesis in Neural Radiance Field},

author={Li, Yuan and Lin, Zhi-Hao and Forsyth, David and Huang, Jia-Bin and Wang, Shenlong},

booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},

year={2023}

}